Unified communications systems are becoming increasingly complex. Emerging technologies are evolving at a mind-boggling speed, and the level of complexity can vary greatly depending on the organization and industry. But one thing is certain: you must regularly conduct performance testing on your technology tools or risk downtime and even complete system failure.

Unified communications and collaboration (UCC) environments are prone to regular change, such as ongoing software and systems upgrades, additions and improvements. Remote working has added a whole other level of intricacy with virtual users, so it's no wonder that all this has given rise to a new industry term: 'Performance Engineering'.

The worldwide evolution of unified communications underscores the need for performance testing tools and performance monitoring to report when changes affect the overall experience for users and customers. Any organization's UC and contact center system always depends on peak performance. Performance testing considers all performance acceptance criteria, benchmarks and standards when a system is stressed or at risk of overloading.

Find out how to test your entire technology ecosystem to further streamline and grow your business by downloading our guide.

What exactly is performance testing?

Performance testing is essentially a software testing process that tests, measures and monitors key performance metrics relating to a network's software applications under a specific workload, such as:

- Speed
- Reliability
- Response time
- Stability
- Scalability
- Usage of resources

How is performance engineering different to performance testing?

Performance software testing is a subcategory of performance engineering.

Performance engineering is a hands-on, pre-emptive approach to software development. Developers and users can ensure frictionless collaboration between various teams and processes by identifying and mitigating performance issues early in the software development cycle.

Under the wider umbrella of performance engineering, it’s not only testers who face accountability for software development quality assurance but also DevOps teams, performance engineers, product owners, and business analysts.

Performance testing provides developers and system managers with the diagnostic information they need to eliminate performance bottlenecks and to ensure that any new system component conforms to the specified performance criteria. To ensure business-critical apps perform seamlessly, it is essential to measure performance testing metrics.

Find out why contact center performance testing is crucial. Read our blog.

Why should I conduct a performance test?

Testing your organization's unified communications and contact center ecosystems provides performance data critical to delivering quality, consistent end-user and customer experience.

Performance tests will help ensure your software meets the expected levels of service and provides a positive user experience, and with the right testing tools, will highlight improvements you should make to your applications relative to speed, stability, and scalability before they go into production.

Any software application's adoption, success, and productivity depend directly on properly implemented performance testing. But first, let's clarify the categories of performance testing and how they relate to this guide, which is mainly concerned with non-functional tests.

What types of things will performance testing reveal?

Organizations run performance tests on their web and mobile applications for one or more of the following reasons:

- To find out where and when computing bottlenecks are occurring within an application.
- To determine whether the application satisfies key performance indicators (for example, whether the system can handle up to 1,000 concurrent users).
- To verify that the performance levels a software vendor claims are actually delivered.
- To compare two or more systems and identify the one that performs best.
- To measure stability under peak traffic events.

Find out more about Cloud Performance Testing by downloading our guide.

Functional testing and non-functional testing - what's the difference?

Functional tests

Functional performance tests verify that each function of the software application operates in conformance with the requirement specification. They are not concerned with the application's source code.

Every function of the system is tested by providing appropriate input, verifying the output and comparing the actual results with the expected results. This testing involves checking the user interface, APIs, database, security, client/server applications and functionality of the application under test. It can be done either manually or using automation.

Non-functional tests

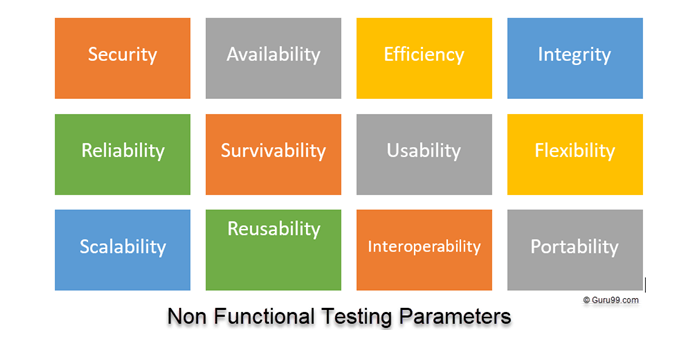

Non-functional performance tests check aspects of a software application such as usability, reliability, flexibility and interoperability. They are explicitly designed to test the readiness of a system against non-functional parameters, which functional tests never address.

A good example of non-functional performance tests would be to check how many people can simultaneously log into a software application.
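The simultaneous-login check can be sketched in a few lines of Python. This is only an illustration: `FakeLoginService`, its `capacity` limit and `count_successful_logins` are hypothetical names standing in for a real application and a real test tool.

```python
from concurrent.futures import ThreadPoolExecutor
import threading


class FakeLoginService:
    """Hypothetical stand-in for a real application.

    It accepts at most `capacity` simultaneous sessions.
    """

    def __init__(self, capacity):
        self.capacity = capacity
        self.active = 0
        self._lock = threading.Lock()

    def login(self, user_id):
        # The lock keeps the session counter consistent across threads.
        with self._lock:
            if self.active >= self.capacity:
                return False
            self.active += 1
            return True


def count_successful_logins(service, users):
    """Fire `users` login attempts concurrently and count the successes."""
    with ThreadPoolExecutor(max_workers=min(users, 32)) as pool:
        results = list(pool.map(service.login, range(users)))
    return sum(results)


if __name__ == "__main__":
    service = FakeLoginService(capacity=50)
    print(count_successful_logins(service, users=80))
```

In a real test the stub would be replaced by calls to the system under test, and the count of successful logins would be compared with the required number of concurrent users.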

Non-functional tests are equally important to functional tests and affect client and user satisfaction.

Image source: Guru 99

Let's look at some of the key non-functional test parameters.

1) Security

This parameter defines how a system is safeguarded against deliberate and sudden attacks from internal and external sources. The main goal of security testing is to identify potential threats and measure a system's vulnerabilities so that the threats can be countered and the system neither stops functioning nor can be exploited. Security testing also helps detect possible security risks in the system, allowing developers to fix the problems through coding.

2) Reliability

The extent to which any software system continuously performs the specified functions without failure. Reliability Testing checks whether the software can perform a failure-free operation for a specified period in a particular environment. Reliability testing ensures the software product is bug-free and reliable enough for its expected purpose.

3) Survivability

Recovery Testing verifies software's ability to recover from software/hardware crashes, network failures, etc. Recovery Testing aims to determine whether software operations can continue after a disaster or integrity loss. Recovery testing involves reverting software to the point where integrity was known and reprocessing transactions to the failure point.

4) Availability

The parameter determines the degree to which the user can depend on the system during its operation. Stability Testing is done to check the efficiency of a developed product beyond a particular workload capacity, often to a breaking point. Stability testing is also referred to as load testing or endurance testing.
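Availability is commonly reported as the share of an observation window during which the system was usable. A minimal sketch of that arithmetic follows; the function name and the hour-based inputs are assumptions chosen for illustration.

```python
def availability_percent(uptime_hours, downtime_hours):
    """Return availability as a percentage of the observed window."""
    total = uptime_hours + downtime_hours
    if total <= 0:
        raise ValueError("observation window must be positive")
    return 100.0 * uptime_hours / total


if __name__ == "__main__":
    # One hour of downtime in a 720-hour (30-day) window.
    print(round(availability_percent(719, 1), 2))
```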

5) Usability

The ease with which the user can learn, operate, and prepare inputs and outputs through interaction with a system. It measures how end-users use software applications and exposes usability defects.

6) Scalability

Scalability testing measures the performance of a system or network under maximum user load or when the number of user requests is scaled up or down. It also helps ensure that the system can handle projected increases in user traffic, data volume, transaction counts and frequency, and it tests a system's ability to meet evolving needs.

7) Interoperability

Interoperability tests aim to ensure that the software product can communicate with other components or devices without any compatibility issues.

In other words, interoperability testing aims to determine end-to-end functionality between two communicating systems as specified by the requirements. For example, interoperability testing is done between smartphones and tablets to check data transfer via Bluetooth.

Let's further break down some parameters in the performance testing process.

Find out about the pros and cons of cloud vs. on-premise performance testing. Read our guide.

Types of performance tests

Before conducting performance testing, it's important to identify the testing environment so that you can clearly understand your hardware, software and network configurations. By becoming completely familiar with the test environment, you'll have a head start on flagging any problems that might occur throughout the process. Additionally, you'll be better equipped to identify performance testing challenges and equally to identify project success criteria.

You'll also need to re-create a testing environment that mirrors the production ecosystem as closely as possible for accurate test results. If you don't do this, the test results may falsely represent an application's performance when it finally goes live.

Image source: Celestial Systems

Stress testing

Stress testing is a type of performance test that checks the upper limits of your system by testing it under extreme loads. Stress tests monitor how the system behaves under intense loads and how it recovers when returning to normal usage. It checks that KPIs like throughput and response time are the same as before load spikes. Stress testing tools also look for memory leaks, slowdowns, security issues, and data corruption.

What can you measure with a stress test?

Depending on the application, software, or technology used in your environment, what is measured during a stress test can vary. Still, a stress test typically surfaces overall performance issues, the effect of unexpected traffic spikes, memory leaks and bottlenecks, using metrics such as:

- Response times. Stress testing can indicate how long it takes to receive a response after a request is sent.
- Hardware constraints. This measures CPU usage, RAM, and disk I/O. If response times are slow, these hardware components could be to blame.
- Throughput. How much data is sent or received during the stress test, based on bandwidth levels.
- Database reads and writes. If your application uses multiple systems, stress tests can indicate which system or unit is causing a bottleneck.
- Open database connections. Large databases can severely impact performance, slowing response times.
- Third-party content. Web pages and applications rely on many third-party components. Stress testing will show you which ones may impact your page or application's performance.
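As a rough illustration of how the response-time and throughput figures above might be summarized after a run, here is a minimal Python sketch. The function name `summarize_run` and its inputs are assumptions; a real testing tool would collect these samples for you.

```python
import statistics


def summarize_run(samples_ms, bytes_transferred, duration_s):
    """Summarize one test run from raw response times (ms) and traffic totals."""
    ordered = sorted(samples_ms)
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    cuts = statistics.quantiles(ordered, n=100, method="inclusive")
    return {
        "mean_ms": statistics.fmean(ordered),
        "p95_ms": cuts[94],
        "max_ms": ordered[-1],
        "throughput_bytes_per_s": bytes_transferred / duration_s,
    }


if __name__ == "__main__":
    report = summarize_run(list(range(1, 101)), bytes_transferred=1000, duration_s=10)
    print(report)
```

Reporting a high percentile alongside the mean is a common choice because a few very slow responses can hide behind a healthy-looking average.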

Load testing

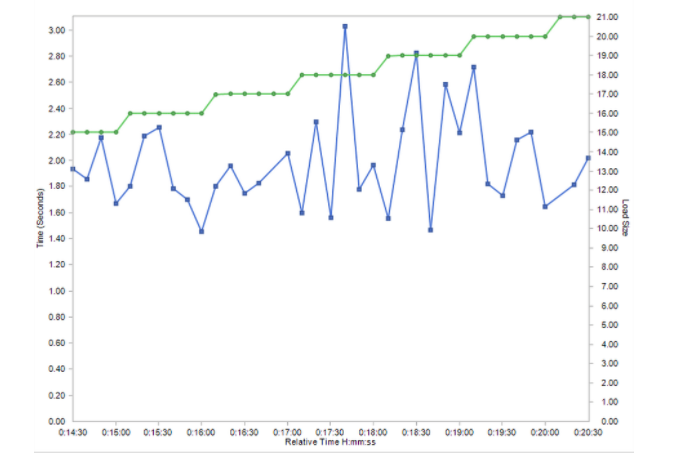

Load testing tools ensure that a network system can handle an expected traffic volume or load limit as a workload increases. In other words, a load test measures system performance when bombarded with specific levels of simultaneous requests. Load tests are sometimes referred to as volume tests.

Load tests aim to prove that a system can handle its load limit with minimal to acceptable performance degradation. Before carrying out a load test, testers need to:

- Create a test environment that closely reflects the production environment in terms of hardware, software specifications, network, etc.
- Create load test scenarios. A test scenario combines test data, scripts and the virtual users executed during a testing session.

The example in the graph below shows a load of 20 users, testing to ensure the page time does not exceed 3.5 seconds.
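A load test of this shape, 20 virtual users with a 3.5-second page-time limit, could be sketched as follows. Everything here is illustrative: `fake_page` is a hypothetical stand-in for a real page request, and `run_load_test` is not the API of any particular tool.

```python
from concurrent.futures import ThreadPoolExecutor
import time


def timed_call(fetch_page):
    """Return how long one call to fetch_page takes, in seconds."""
    start = time.perf_counter()
    fetch_page()
    return time.perf_counter() - start


def run_load_test(fetch_page, virtual_users=20, max_page_time_s=3.5):
    """Run fetch_page once per virtual user, all at the same time."""
    with ThreadPoolExecutor(max_workers=virtual_users) as pool:
        timings = list(
            pool.map(lambda _: timed_call(fetch_page), range(virtual_users))
        )
    slowest = max(timings)
    return {"slowest_s": slowest, "passed": slowest <= max_page_time_s}


def fake_page():
    # Hypothetical stand-in for a real page request.
    time.sleep(0.01)


if __name__ == "__main__":
    print(run_load_test(fake_page))
```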

Spike testing

Just as a stress test is a type of performance testing, there are types of load testing as well. If your stress test includes a sudden, high ramp-up in the number of virtual users, it is called a spike test. Spike testing aims to see how your system performs in an unexpected rise and fall in the number of users. In performance engineering, spike tests help determine how much system performance deterioration occurs during a sudden high load.

Another goal of Spike Testing is to determine the recovery time. Between two successive spikes of user load, the system needs some time to stabilize. This recovery time should be as low as possible.

How to do spike tests

1) Determine the load capacity of your software application

2) Prepare the test environment and configure it to record performance parameters based on acceptable performance criteria

3) Apply the maximum expected load to your software application using your performance test tools

4) Rapidly increase the load for a set period

5) Set the load back to its original level

6) Analyze the results
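The steps above can be sketched as a simple load profile plus a recovery-time calculation. This is a minimal illustration, assuming per-second load settings and response-time samples; the function names and parameters are hypothetical.

```python
def spike_profile(baseline_users, spike_users, warmup_s, spike_s, recovery_s):
    """Return (second, virtual_users) pairs: baseline, sudden spike, baseline."""
    profile = []
    for t in range(warmup_s + spike_s + recovery_s):
        in_spike = warmup_s <= t < warmup_s + spike_s
        profile.append((t, spike_users if in_spike else baseline_users))
    return profile


def recovery_time_s(response_times, spike_end_s, acceptable_ms):
    """Seconds after the spike ends until a response is acceptable again.

    response_times is a list of (second, response_ms) pairs sorted by time.
    Returns None if the system never recovers within the samples.
    """
    for t, ms in response_times:
        if t >= spike_end_s and ms <= acceptable_ms:
            return t - spike_end_s
    return None


if __name__ == "__main__":
    print(spike_profile(10, 100, warmup_s=2, spike_s=3, recovery_s=2))
    samples = [(4, 900), (5, 700), (6, 400), (7, 200)]
    print(recovery_time_s(samples, spike_end_s=5, acceptable_ms=250))
```

This sketch treats the first acceptable response as the recovery point. A stricter check might require several consecutive acceptable responses before declaring the system stable.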

Soak tests

Stress testing over a long period to check the system's sustainability is called a soak test. Soak testing is sometimes referred to as endurance, capacity, or longevity testing and involves testing the system to detect performance-related issues such as stability and response time.

The system is then evaluated, and resource usage is checked to see whether it could perform well under a significant load for an extended period. This type of performance testing measures its reaction and analyzes its behavior under sustained use.
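One way to check resource usage after a soak test is to look at the trend of memory use over time. The sketch below fits a least-squares line to hypothetical (hours elapsed, memory in MB) samples; the threshold of 1 MB per hour is an arbitrary assumption, not a recommended value.

```python
def growth_per_hour(samples):
    """Least-squares slope of memory use, in MB per hour.

    samples is a list of (hours_elapsed, memory_mb) pairs.
    """
    n = len(samples)
    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in samples)
    den = sum((x - mean_x) ** 2 for x, _ in samples)
    return num / den


def looks_like_leak(samples, max_growth_mb_per_hour=1.0):
    """Flag a run whose memory use keeps climbing faster than the limit."""
    return growth_per_hour(samples) > max_growth_mb_per_hour


if __name__ == "__main__":
    climbing = [(0, 500), (1, 505), (2, 510), (3, 515)]
    print(growth_per_hour(climbing), looks_like_leak(climbing))
```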

Scalability testing

Scalability tests determine if the software is effectively handling increasing workloads. This can be determined by gradually adding to the user load or data volume while monitoring system performance and resource usage. Also, the workload may stay at the same level while resources such as CPUs and memory are changed.
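To illustrate how results from a gradually increasing load might be read, the sketch below compares per-user throughput at each step with the first step. The function name and the sample figures are assumptions; a value of 1.0 means throughput grew in proportion to the load.

```python
def scaling_efficiency(results):
    """Compare per-user throughput at each load step with the first step.

    results is a list of (users, requests_per_second) pairs, lowest load first.
    """
    base_users, base_rps = results[0]
    per_user_baseline = base_rps / base_users
    return [
        (users, (rps / users) / per_user_baseline)
        for users, rps in results
    ]


if __name__ == "__main__":
    steps = [(10, 100), (20, 200), (40, 300)]
    print(scaling_efficiency(steps))
```

A falling efficiency figure at higher loads suggests the point at which adding users no longer yields proportional throughput.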

Volume testing

Volume testing determines how efficiently software performs in a production environment with large projected amounts of data. It is also known as flood testing because the test floods the system with data.

Configuration testing

Configuration testing tests multiple combinations of software and hardware to evaluate the functional requirements and determine optimal configurations under which the software application works without any flaws or defects.
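Enumerating the combinations to test can be sketched with the Python standard library. The option names and values below are purely illustrative assumptions.

```python
from itertools import product


def configuration_matrix(options):
    """Return every combination of the given options as a list of dicts."""
    keys = list(options)
    return [
        dict(zip(keys, combo))
        for combo in product(*(options[key] for key in keys))
    ]


if __name__ == "__main__":
    matrix = configuration_matrix(
        {"os": ["Windows", "Linux"], "browser": ["Chrome", "Firefox", "Edge"]}
    )
    for config in matrix:
        print(config)
```

The number of combinations grows quickly as options are added, so in practice teams often test a prioritized subset rather than the full matrix.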

Likely problems encountered during performance testing

In a performance testing environment, developers are looking for specific issues that can impair system performance:

- Speed issues: slow responses and long load times, for example, are often found in a test environment and addressed.
- Bottlenecking: this occurs when data flow is interrupted or halted because there is not enough capacity to handle the workload.
- Poor scalability: if software cannot handle the desired number of concurrent tasks, results could be delayed, errors could increase, or other unexpected behavior could occur that affects:
  - Disk usage
  - CPU usage
  - Memory usage
  - Operating system limitations
  - Network configurations
- Software configuration issues: often, settings are not configured to handle the workload.
- Insufficient hardware resources: performance testing may reveal physical memory constraints or low-performing CPUs.

Performance testing tools & best practices

First, choosing the right performance testing tool is critical: it must support the types of performance testing you want to carry out, such as identifying performance bottlenecks.

Perhaps the most important tip for performance testing is testing early and testing often. A single test won't be sufficient to give developers all the information and details they need. Successful performance testing is a collection of repeated and smaller tests:

- Run performance tests as early as possible in development. Don't wait and rush performance testing as the project winds down.
- Build test cases and test scripts around performance metrics. These metrics enable test engineers or UAT teams to:
  - Identify the current performance of the application, infrastructure, and network
  - Compare performance testing results and analyze the impact of things like code changes
  - Make informed decisions regarding improving the quality of software
- Performance testing isn't just for completed projects. There is value in testing individual units or modules.
- Conduct multiple performance tests to ensure consistent findings and determine metric averages.
- Applications often involve multiple systems, such as databases, servers, and services. Performance test the individual units separately as well as together.
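Comparing averaged results from repeated runs, for example before and after a code change, might look like the following sketch. The function name and the 10% tolerance are assumptions chosen for illustration.

```python
import statistics


def compare_runs(baseline_ms, candidate_ms, tolerance=0.10):
    """Compare mean response times from repeated runs of two builds.

    A candidate is flagged as regressed when its mean is more than
    `tolerance` (as a fraction) slower than the baseline mean.
    """
    base = statistics.fmean(baseline_ms)
    cand = statistics.fmean(candidate_ms)
    change = (cand - base) / base
    return {
        "baseline_mean_ms": base,
        "candidate_mean_ms": cand,
        "change": change,
        "regressed": change > tolerance,
    }


if __name__ == "__main__":
    print(compare_runs([200, 210, 190], [240, 250, 230]))
```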

What if I don't do performance tests?

When nonfunctional performance testing is overlooked, performance and UX defects can leave users with a bad experience and cause brand damage. Worse, without a performance test, applications could crash with an unexpectedly increased number of users. Also, accessibility defects can result in compliance fines. And your security could be at risk.

With IR Collaborate's advanced performance testing tools, you can have confidence in your voice, web and video. Using our software performance testing solutions, you can identify the gaps between your assumptions and actual system performance and get the real-time insights you need to deliver service that exceeds customer expectations.

For more information on cloud performance testing, read our blog Cloud Performance Testing Tips and Tricks.

Contact us to find out more about the performance testing process and how our testing solutions reduce risks in your organization, as well as improve customer and user experience.

Find out how to test your entire technology ecosystem to further streamline and grow your business by downloading our guide.
